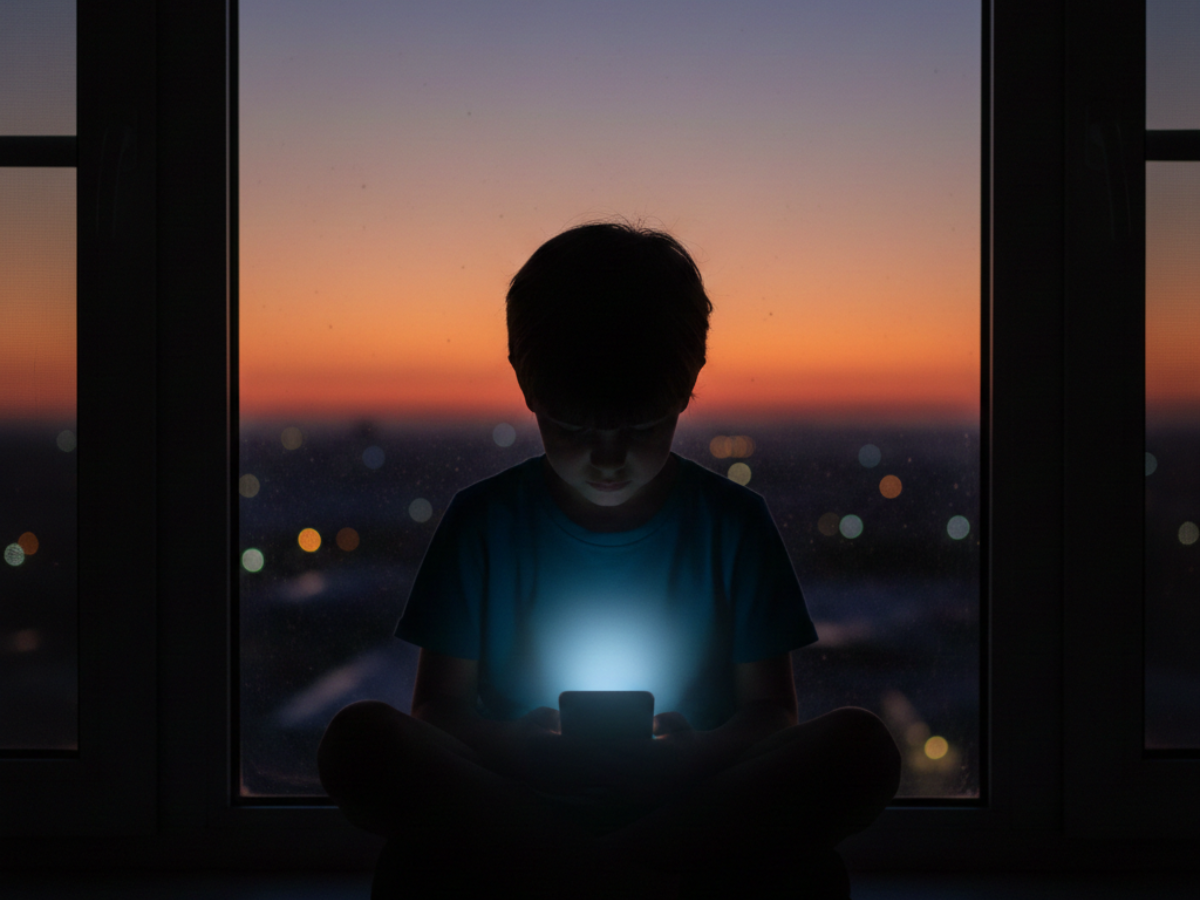

MUMBAI: Australia’s decision to ban social media for children under 16 has struck parents worldwide like lightning. For many of us, the immediate reaction was a fierce, instinctive nod of agreement. We have all watched the light fade from our children’s eyes as they doom-scroll through TikTok feeds. We have fought nightly battles over surrendered phones. We have worried about cyberbullying following them into their bedrooms and impossible beauty standards greeting them each morning. The notion that governments could simply step in and declare “Enough” feels, for a fleeting moment, like rescue.

Yet when the dust settles and we gaze upon our own children, digital natives who never knew a world without connection, the relief begins to curdle into doubt. Is this truly a solution, or a surrender? Are we building walls to keep them safe, or admitting failure in teaching them how to swim?

The global momentum is undeniable. Australia leads with hard bans, but it does not stand alone. Malaysia is drafting similar laws, Europe is debating raising the age of digital consent, and across the United States, from Florida to Utah, states are attempting to force platforms to seek parental permission. The message from governments rings clear: Big Tech has abdicated responsibility, so the state must step in as the ultimate parent. The dominoes continue to fall: Canada considers strict verification systems; Japan explores cultural approaches; Brazil links digital access to educational outcomes. The pattern emerges not by chance but through a shared recognition that our children’s digital future requires collective stewardship beyond corporate interests or national borders. Yet watching my teenager navigate their world compels me to ask: does a ban truly solve loneliness, or merely isolate them further?

We know the harms are real. Algorithms are designed to be addictive; they prey on insecurity and reward outrage. Yet for a generation that socializes, studies, and explores identity online, cutting access removes not just a distraction but a public square. Think of teenagers finding support for anxiety, or LGBTQ youth in conservative towns discovering accepting communities. If we lock the doors until 16, do we sever those lifelines? Are we shielding them from harm, or cutting the connections that keep some of them afloat?

The paradox emerges: we protect children from curated perfection while potentially denying them access to authentic community. The digital realm offers not just connection but identity formation, a space where marginalized youth discover voices previously unheard. When that space is removed too soon, do we create voids that echo through development?

Then enforcement presents a practical nightmare. To ban children, platforms must identify them. Every user, adult and child alike, must prove their age. Are we prepared to hand passports and face scans to companies we already distrust with our data? It feels like a cruel irony. To protect children’s privacy from data-hungry corporations, we build a surveillance infrastructure that tracks every citizen’s identity. We tell children anonymity is dangerous, yet create a world in which they are tracked from the first click. As a parent, I want my child safe from predators, but do I want them growing up in a digital panopticon where every move is verified, logged, and stored? Is privacy the price of safety?

The verification systems themselves raise questions: will centralized databases become new targets for hackers? Do we trade immediate protection for long-term vulnerability? The challenge extends beyond technology to include human factors: parents overwhelmed by options, children navigating complex interfaces, platforms balancing security with accessibility.

Let us be honest about capacity. The “digital literacy alternative,” teaching smarter online use, often feels like a cop-out. It assumes we parents know what we’re doing. Yet half the time, we match our children’s screen addiction. How can we police their consumption when we barely control our own?

The “digital orphan problem” haunts me. Wealthy homes will find workarounds: family accounts, VPNs, curated safe gardens. But what of the children of working parents? Of families lacking the time or tech-savviness to navigate verification systems? Those children won’t merely be banned; they’ll be exiled. They will lose the information flows their peers use to get ahead. We risk creating a two-tier childhood where the rich gain “safe” digital citizenship and the poor face exclusion.

Digital literacy cannot remain theoretical; it must become practical, embedded in school curricula, community programs, and family routines. Without intentional cultivation, the gap widens, creating not just divides but developmental disparities that shape opportunities for decades.

Also consider how danger migrates. If TikTok and Instagram are off-limits, will children cease seeking connection? Or will they move to darker corners: encrypted apps, unmoderated gaming lobbies, decentralised servers beyond regulators’ reach? Are we driving them from noisy town squares into unlit alleyways? Mainstream apps offer reporting tools, moderators, safety centres. The underground offers nothing. A ban feels definitive, yet prohibition has historically driven vice underground, making it more dangerous and harder to police.

The pattern repeats through generations: when alcohol was banned, speakeasies emerged; when restrictions increased, innovation flourished in the shadows. Today’s digital exiles may become tomorrow’s architects of safer platforms, having learned resilience through necessity rather than design.

The shift in responsibility is profound. For years we blamed platforms; now governments intervene. Yet does that absolve the tech giants? If laws mandate “no kids under sixteen,” will Meta or Google simply shrug and declare blocking sufficient? Does a ban let Big Tech avoid designing safe products? Instead of demanding apps that are safe by design (non-addictive, non-tracking, free of harmful content), we merely remove the users. It resembles fixing a dangerous intersection by banning pedestrians instead of installing traffic lights. We should demand a safer internet for all, not a children-free internet.

The accountability framework requires evolution beyond compliance to include proactive design principles. When platforms prioritize engagement over well-being through algorithmic choices, regulation must respond not just with age restrictions but with fundamental standards for digital environments.

The deepest question concerns trust. The teenage years are a rehearsal for adulthood: messy, risky, mistake-filled. That is how resilience builds. If we scrub their world clean of risk until sixteen, what unfolds on that birthday? Do we throw them into algorithmic oceans without ever letting them paddle in the shallows? An “apprenticeship” model of graduated access (learning messaging before feeds, feeds before broadcasting) seems more human. It treats children as digital citizens-in-training, not potential victims. Yet this requires nuance, and nuance rarely makes headlines. A ban is a sledgehammer; parenting is a scalpel.

The apprenticeship approach recognizes development as a journey rather than a destination, allowing exploration within boundaries that expand with competence and judgment. This scaffolding prepares youth not just for platforms but for participation in increasingly complex digital ecosystems. As global headlines reveal, from Australia’s strict laws to US state battles, we are struggling to put the genie back in the bottle.

We are right to be afraid. The current experiment on our children has gone on too long. Yet reaching for the off switch demands wisdom over panic. International cooperation emerges not as a luxury but as a necessity. When platforms operate across jurisdictions, fragmented regulations create loopholes and confusion. Harmonized standards that balance protection with participation offer a framework that transcends national interests while respecting diverse cultural contexts.

Are we building fences to protect them, or merely closing the curtains on the world outside? For eventually they must walk through the door. And I fear that banning their world doesn’t prepare them; it merely delays the collision. The path forward requires balance: neither complete restriction nor unfettered access, but intentional design that honors both safety and growth. As parents, educators, and policymakers, we hold not just a responsibility but an opportunity to shape digital generations yet unborn.

When our children look back at this pivotal moment, will they see wisdom or missed chances? The answer unfolds in the choices we make today: between protection and preparation, between control and confidence, between solving today’s problems and preparing for tomorrow’s unknowns.

Brijesh Singh is a senior IPS officer and an author (@brijeshsingh on X). His latest book on ancient India, “The Cloud Chariot” (Penguin), is out on stands. Views are personal.