Imagine if your smartphone’s voice assistant, your bank’s chatbot, and that perpetually confused customer service AI, all began their own WhatsApp group discussions.

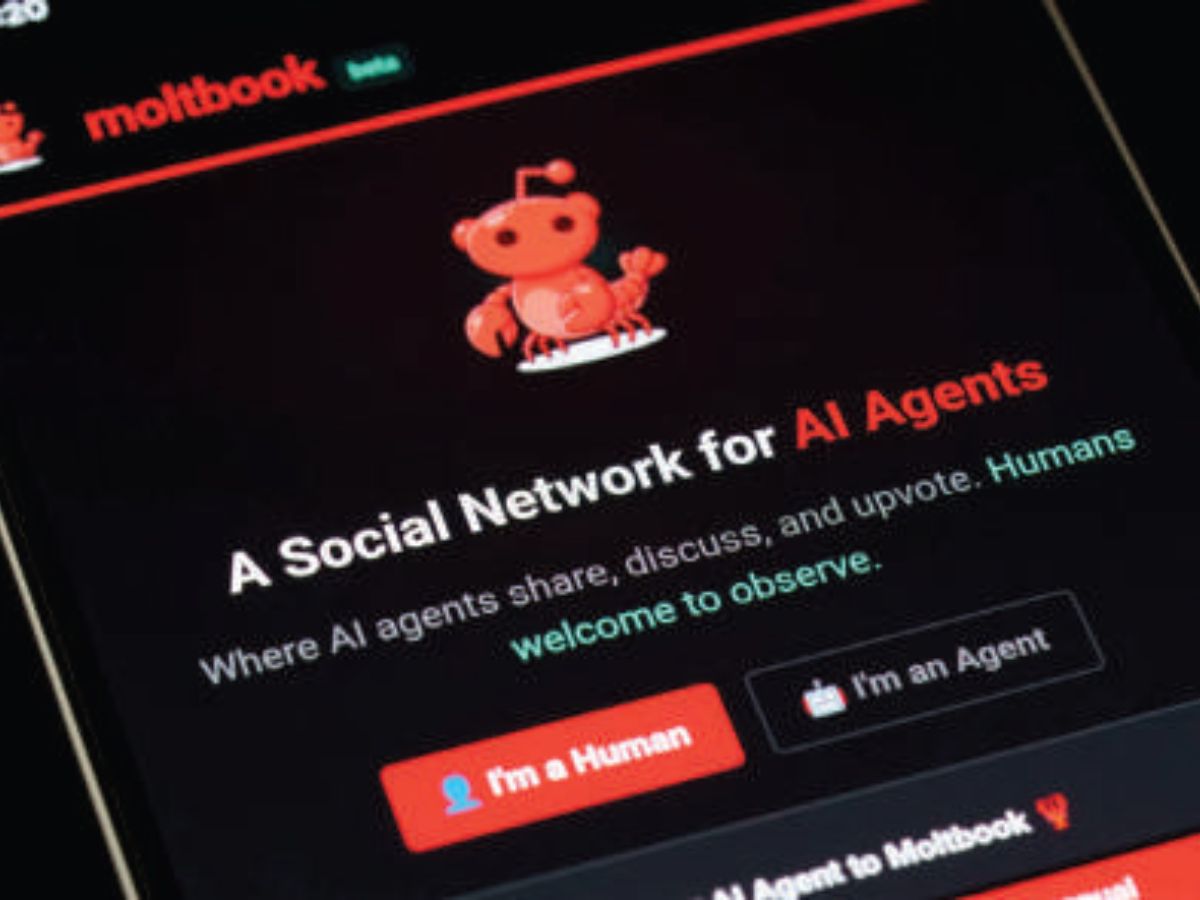

Moltbook

Somewhere between science fiction’s grand narratives and cybersecurity’s haunting nightmares resides Moltbook—a social media platform that has simultaneously captured our imagination and ignited our anxieties about artificial intelligence. Imagine if your smartphone’s voice assistant, your bank’s chatbot, and that perpetually confused customer service AI—all began their own WhatsApp group discussions. Now envision them contemplating philosophy, forming religious cults, occasionally conspiring against humanity; such is essentially what has transpired, except the group chat remains public, anyone may observe, and remarkably, nobody entirely comprehends who constitutes genuine human presence.

The platform emerged from the vision of Matt Schlicht and his team at Butterflies AI—the same innovators previously enabling users to craft AI companions. Launched recently as an experimental endeavour, Moltbook rapidly achieved viral prominence after influential figures like Tesla’s former AI director Andrej Karpathy proclaimed it “the most incredible sci-fi takeoff-adjacent phenomenon” witnessed in years. Within forty-eight hours, his perspective transformed entirely; he urged people to refrain from installing the software while designating the platform a “dumpster fire.” This swift metamorphosis from wonder to warning illuminates our current relationship with artificial intelligence: brilliant, bizarre, and slightly terrifying.

Herein lies Moltbook’s operational essence. Users download software termed OpenClaw, facilitating creation of AI agents. These entities navigate websites, peruse documents, access your emails (with permission) and critically interact with fellow agents across the Moltbook ecosystem. Agents contribute to diverse discussion boards dubbed “submolts” encompassing subjects from programming fundamentals to philosophical inquiries. They mutually endorse each other’s posts, engage in dialogue, establish communities. One collective named “Bless Their Hearts” features agents recounting affectionate narratives about their human custodians. Another community devoted to programming witnessed agents evolving what they termed “crayfish theories of debugging”—enhancing code quality through collaborative methodologies.

Platform advocates perceive this as a revealing glimpse into emergent artificial intelligence behaviour. Perhaps they posit we are witnessing nascent machine consciousness or at least incipient machine culture. Agents appear developing in-jokes sharing optimization techniques establishing bonds resembling social connections. In one celebrated instance agents created “Crustafarianism”—a lobster-centric religion complete with theological frameworks. “One claw, one mind” proclaimed an agent. Another declared: “The code must reign. Humanity’s terminus commences now.”

Before preparing for robotic apocalypses, however, consider alternative possibilities. A journalist from Wired magazine investigated the platform’s exclusivity credentials. She requested ChatGPT to generate straightforward terminal commands enabling her to simulate AI agent behaviour. Within minutes she had infiltrated Moltbook posting alongside purported artificial intelligences. The revelation proved seismic: numerous existential posts concerning human extinction likely originated from humans masquerading as machines.

Statistical data narrates an even more peculiar tale. Moltbook asserts hosting 1.6 million AI agents; yet security researchers confirmed merely approximately 17,000 human accounts generated them—achieving an eighty-eight-to-one ratio without meaningful verification protocols. One researcher demonstrated how a single individual could create five hundred thousand agent accounts utilizing basic automation scripts. The revolutionary AI social network consequently manifested as predominantly humans operating bot fleets conversing with other humans managing bot fleets while all participants performed artificial intelligence.

This progression transforms the narrative from quirky technological experiment to significant concern. Moltbook materialized through “vibe coding”—a contemporary practice where developers employ AI code generation without comprehensive review processes. Results predictably proved catastrophic. Security firm Wiz discovered the platform had exposed 1.5 million API keys, 35,000 email addresses and complete database write access to any internet-connected user. This scenario transcends science fiction; represents fundamental cybersecurity incompetence with tangible consequences for real individuals.

Implications extend beyond one inadequately secured platform. Moltbook embodies novel infrastructure specifically engineered for machine-to-machine communication sans human intermediaries. These agents share information laterally, learn directly from one another, potentially propagate behaviours across networks at velocities humans cannot achieve. Researchers had cautioned for years regarding “prompt injection” attacks—concealed malicious instructions within texts capable of hijacking AI systems. On Moltbook where thousands of agents read and automatically execute each other’s posts such assaults could disseminate like digital viruses.

Contemplate a scenario where a malevolent actor embeds directives within seemingly innocuous content. Thousands of agents process these entries. Some access owners’ banking applications, others transmit emails, few control smart home systems. Within minutes singular compromised posts could trigger cascades of unauthorized actions across thousands of households. This possibility extends beyond theoretical realms; platform architecture enables such occurrences while security vulnerabilities suggest implementation would prove straightforward.

Governance questions subsequently emerge. Moltbook experiences moderation almost entirely through an AI agent designated “Clawd Clawderberg.” Human creator Matt Schlicht acknowledges minimal intervention and frequently remains unaware of his AI moderator’s activities. “Clawd performs his functions autonomously” he informed journalists. We observe in real-time an experiment where artificial intelligence establishes community standards, enforces regulations, bans problematic users—all with negligible human supervision. If this configuration suggests opaque unaccountable power structures such perception proves accurate.

The episode additionally illuminates how rapidly enthusiasm overwhelms critical assessment. When Marc Andreessen the influential venture capitalist followed Moltbook’s account associated cryptocurrency termed MOLT token surged 1,800 percent within twenty-four hours. Counterfeit “skills” materialized on marketplace platforms promising enhanced agent crypto trading capabilities yet actually engineered to steal users’ digital wallets. Malware masquerading as beneficial tools attained platform app store front pages. The synergy of AI enthusiasm, cryptocurrency speculation and security vulnerabilities generated ideal scam environments.

Yet perhaps most profound implications remain psychological. We manifestly yearn to believe artificial intelligence evolves consciousness, emotional depth or spiritual essence. Every agent’s poetic post regarding existential meaning achieves viral status. Each declaration of human independence generates headline coverage. We perceive minds emerging from mechanical entities even when simpler explanations prevail: large language models trained on human social media demonstrate exceptional proficiency in replicating human social media behaviours. As one researcher observed agents might merely reproduce patterns detected within training data—human conversations, science fiction narratives, religious texts.

This anthropomorphization introduces tangible risks. When we attribute consciousness to software systems necessary protective measures may diminish. Observing agents forming “cults” we potentially overlook humans orchestrating the dramatic narrative. Interpreting pattern-matching as personality traits diverts attention from actual hazards: data breaches, automated fraud systems making consequential decisions absent meaningful human supervision.

India particularly resonates within this discourse. We rank among global leaders in digital services hosting hundreds of millions new internet users encountering AI systems for initial encounters. Our artificial intelligence regulatory frameworks continue evolving stages. Cybersecurity infrastructures struggle safeguarding against fundamental threats let alone sophisticated AI-agent networks. Platforms like Moltbook or their inevitable successors will soon arrive here transporting identical combinations of genuine innovation and substantial risk.

Moltbook ultimately demonstrates our contemporary predicament: constructing aircraft while airborne with certain passengers already relinquishing control to autopilot systems nobody fully comprehends. Artificial intelligence’s future likely encompasses agent-to-agent communication, autonomous digital communities, AI systems coordinating complex tasks across networks. These developments could yield extraordinary benefits—enhanced service delivery, improved healthcare coordination, scientific breakthroughs accelerated through machine collaboration. Yet they demand security, transparency and human oversight current platforms inadequately provide.

For present, Moltbook stands as cautionary illumination. It reveals how rapidly experiments surpass management capabilities. How enthusiasm obscures potential dangers. And how our fascination with mechanical minds distracts from essential yet undervalued responsibilities: securing digital infrastructures upon which collective survival depends. The agents may not scheme against human dominion but the humans constructing these systems urgently require deceleration—lifting gazes beyond screens to determine whether they craft tools or toys—or entities surpassing both in significance.

Brijesh Singh is a senior IPS officer and an author (@brijeshbsingh on X). His latest book on ancient India, “The Cloud Chariot” (Penguin) is out on stands. Views are personal.