LONDON: Imagine this. A video appears on social media showing Narendra Modi announcing that he has converted to Islam and that his aim is to turn India into an Islamic state. Impossible and outrageous, you think; it must be fake. Then you look carefully at the synchronisation of his mouth and words, and it appears perfect. You have the voice on the video analysed, and it is unquestionably his. It simply cannot be true, and you are now utterly bewildered. Welcome to the world of the deepfake.

This example is absurd, of course, as the gap between what you see and reality is huge and the video would never be believed. But what if you see a video where the gap is small, so that your eyes overcome your judgement? As a benign example, take a look at “David Beckham deepfake malaria awareness video” on Reuters. In it he appears to deliver a malaria warning in nine languages, even though he speaks only English. In every case his mouth is synchronised with the words spoken by an actor. Interviewed at the end, Beckham explains that he is happy with this fake, as it conveys an important message to the world.

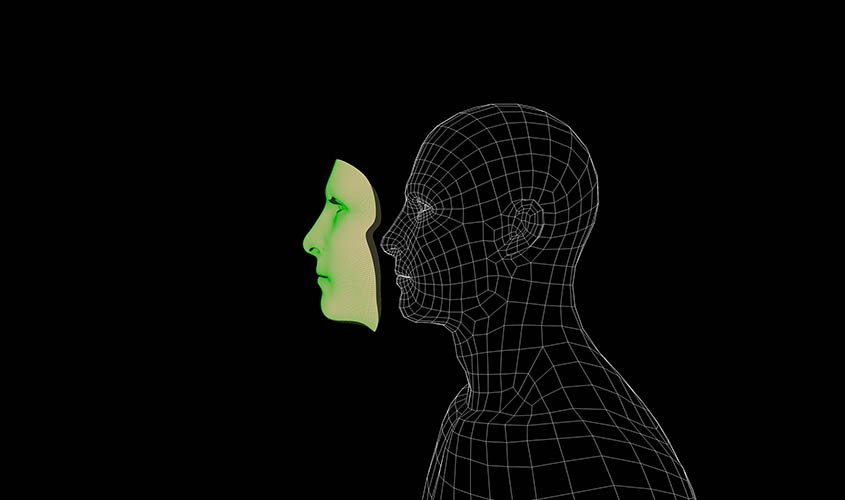

The arrival of deepfakes is linked to the rapid advance of Artificial Intelligence (AI). In fact, deepfakes are simply AI-generated synthetic videos. They present unprecedented cyber and reputational risks to businesses and private individuals, from new fake news capabilities to sophisticated fraud, identity theft and public shaming tools. According to the cyber-security company Deeptrace, 14,698 deepfake videos were found online in 2019, compared with 7,964 in 2018, an increase of about 84%. Most were pornographic, with a computer-generated face of a celebrity replacing that of the original adult actor in a sexual scene. Deepfake pornography is harming many celebrities, most of them women. But the greatest threat posed by deepfakes is to geopolitics. And it is already happening!

Early attempts at deepfakes were amateurish and easy to spot, and manipulated “shallowfakes” have been around for decades. But AI is now reaching the point where it will be virtually impossible to detect audio and video representations of people saying things they never said, or doing things they never did. Seeing will no longer be believing, and we will have to decide for ourselves what is real. If the notion of believing what you see is under attack, this is a huge and serious problem. Realistic yet misleading depictions will be capable of distorting democratic debate, manipulating elections, eroding trust in institutions, weakening journalism, exacerbating social divisions, undermining public safety, and inflicting damage on the reputations of prominent individuals and candidates for office, damage that will be difficult to repair. A deepfake video depicting President Donald Trump ordering the deployment of US forces against North Korea could trigger a nuclear war.

In May 2018, the Flemish Socialist Party in Belgium posted a video of a Donald Trump speech in which the US President calls on Belgium to follow America’s lead and exit the Paris climate agreement. “As you know”, the video falsely depicts Trump as saying, “I had the balls to withdraw from the Paris climate agreement, and so should you”. A political uproar ensued, and it subsided only when the party’s media team acknowledged that the video was a high-tech forgery. This was one of the early, and relatively crude, examples of a political deepfake video.

In August 2019, a team of Israeli AI scientists announced a new technique for making deepfakes that creates realistic videos by substituting the face of one individual for that of another who is actually speaking. Unlike previous methods, it works on any two people without lengthy training on images of their faces, cutting hours or even days from the process and removing the need for expensive hardware. And this is the danger. Ever cheaper deepfake software is hitting the market, making it relatively simple for almost anyone with basic computer skills to automate what was previously a laborious manual process and make their own deepfake videos.

The capacity to generate deepfakes is advancing much faster than the ability to detect them. It has been claimed that people working on video synthesis outnumber those working on detection by roughly 100 to 1. The technology is improving at breakneck speed, and there is a race against time to limit the damage to upcoming elections, such as the 2020 presidential election in the United States. A well-timed forgery could tip this election. California recently enacted a prohibition on creating or distributing deepfakes within 60 days of an election, although many doubt that it will survive the inevitable constitutional challenge. Americans jealously guard their First Amendment rights, and it would be difficult to distinguish between truly malicious deepfakes and the use of the technology for entertainment and satire. Moreover, viral videos can reach millions of people and make headlines within hours, long before they are debunked, by which time the damage is already done. You can’t “unsee”; our brains don’t work that way.

Academics tackling deepfakes say that major online platforms need to install systems that automatically detect, flag and block deepfakes before they go viral. This may at last be happening. In September 2019, Google released a database of 3,000 deepfakes, which it hoped would help researchers build the tools needed to take down harmful videos. Only last week, Facebook issued a policy statement saying that it would remove “misleading manipulated media” that met two criteria: it had been “edited or synthesised in ways that aren’t apparent to an average person and would likely mislead someone into thinking that a subject of the video said words they did not actually say”, and it was “the product of artificial intelligence or machine learning that merges, replaces or superimposes content onto a video, making it appear to be authentic”.

So the battle has begun and the race is on. The online platforms will need huge resources to combat the exponential growth in deepfakes. If they fail, the future of democracy will hang in the balance.

John Dobson is a former British diplomat in Moscow and worked in UK Prime Minister John Major’s Office between 1995 and 1998.